Saw a great video pop up on Digg a little while ago, less Mac-based than the last one, and this time proving that there is a point to Second Life (even if it's not quite the samurai sword filled metaverse that we might have hoped for).

This video by Robbie Dingo is based on The Starry Night by Van Gogh, and underlines that not everything in Second Life is real.

Or something :-/

My Blog has moved!

Thanks for visiting, but my blog has now moved to a new home at www.davidmillard.org. If you have JavaScript enabled you should be redirected automatically to the right place; if not, please follow the link directly to my new home page.

Monday, August 18, 2008

Sunday, July 27, 2008

Duck Taped by Apple in California

Why is the brilliance of the new iPhone 3G so intangible? When I try and explain why I've replaced one 3G, GPS enabled, touch screen smart phone (a TyTN II) with another I get blank stares. When I show people how it works, they nod and smile as if to say that this is just what they expected - all perfectly normal.

So how come I feel like I've made such a technical leap forward - how come this simple device blows me away?

Last week I could browse the internet, I could watch videos, play games, listen to podcasts or my favorite albums, I could read my email and even make phone calls if I had to. Last week I could do all the things that I can do this week, but this week I have a smile on my face as I do them. Why?

It's taken me a week of thinking about it to come up with an answer. I think it's exactly because I had a similar device before that the iPhone seems so incredible. In 2003 I opted out of consumer mobile devices. I replaced my Nokia handset and aging Palm V with a PocketPC PDA (the HTC Magician) and have been using a variant of PocketPC ever since. I was always impressed by the technology crammed into them, but friends of mine weren't. They'd look at the clunky phone interface and compare it to their latest handsets, raise a disappointed eyebrow at the media player and point out their iPod, or shrug in apathy at the simple games that were available before picking up their PSP.

In short the PocketPC was a jack of all trades, but master of none. There was no way it could compare with the single function devices in the consumer electronics market. It was a generic computer doing its best.

And this is why the iPhone blows me away: it's the first all-in-one device that genuinely competes with other consumer electronics. It's not a generic computer at all; it's a single box with a phone interface as good as any handset, an mp3 player as good as an iPod, a games engine as good as a PSP (well - as good as a Nintendo DS anyway :-), organisers as good as a PDA, and its pièce de résistance - a web browser that's actually as good as a desktop client. Imagine 5 or 6 consumer gadgets all duck taped together and you're about there.

That's why when you show it to your friends they will shrug: whatever application you show them will be of the same standard that they are used to on their dedicated devices. And it's also why some techies don't get it, because on paper the iPhone is no better than the devices that have come before - and in their eyes it might even be worse (after all it only runs on one hardware platform, doesn't allow you to multitask properly, and it's all a bit too tightly controlled by Apple).

But after five cold and lonely years I have returned to the consumer electronics fold, and on reflection it's nice to be back.

The iPhone is a jack of some trades, but master of them all.

Wednesday, July 16, 2008

Open Ports for Open Minds

I'm at the JISC Innovation Forum this week. The Forum is a chance for people working for and funded by JISC to get together and discuss the big challenges in HE, FE and e-learning. The event is arranged around a number of discussion sessions, panels and forums (so it's unlike a traditional conference, as it's not about individual work but the lessons that we can learn as a community).

Yesterday we kicked off with a session about potential future directions for JISC. My suggestion was that JISC should concentrate on helping Universities manage the new wave of technology - not by urging them to adopt it, but by encouraging them to simply move aside, and allow their students and staff some flexibility and freedom.

This has been exemplified by the WiFi network available to us at the Keele University campus where the event is based. Each user requires an individual login and password, and is required to download a mysterious Java app that somehow negotiates access for you. Once connected you are restricted to HTTP and HTTPS requests (no IMAP or VPN for example), and the Java app frequently falls over and gets disconnected, requiring you to kill it (quit doesn't work) and then re-run it (although sometimes this results in you being denied access for a few moments, presumably while you wait for your MAC address to be cleared from some cache somewhere).

This is utter madness, a large neon sign that says "we have been required to offer you this service, but don't trust you and would rather you didn't use it". By making the experience so difficult they could put off a great deal of casual use, by locking the firewall down so tightly they force you to use awkward web alternatives to the tools that you may be used to, and by requiring this bizarre Java stage they ensure that only laptops (no phones or PDAs) can access the network.

I've noticed a trend that students are beginning to abandon University e-learning infrastructure because it is too restrictive, and moving to public offsite facilities (such as Google Groups and Mail), but this is surely a good way of making students opt out of the physical infrastructure too! If I were a student at Keele I would simply buy myself a mobile broadband connection and never use the local system.

JISC's greatest challenge is to get this sort of restrictive practice reversed, so that Universities can start to offer proper IT services to their students in such a way that experimentation and innovation can occur.

I can think of plenty of other examples. We have a colleague working at the University of Portsmouth who is blocked from accessing YouTube on his Uni network, making it impossible for him to access valuable teaching resources (he teaches languages and YouTube is a rich resource of material). At our own University (of Southampton) the central email systems have recently been overhauled, and the ability to set up email forwards to external accounts has been removed. Staff use the University email accounts as points of contact with students (it's impossible to keep track of so many other accounts), and so this now forces students to maintain and check two accounts, rather than the one that they may have used for years.

Networks need to be managed, and damaging or illegal activity needs to be controlled or stopped - but the default policy should be to support openness.

Of course it's as much about open minds as open ports. We have to start respecting the autonomy of our staff and learners. By all means monitor the network and close down services or block ports that develop into a problem (as Napster did a few years ago), but give people the freedom to integrate their existing digital environment and personal gear as they see fit.

The alternative is to see them opt out altogether.

Thursday, July 10, 2008

ICALT 2008 - A Cottage Conference?

Last week I was at IEEE ICALT 2008, held in Santander, Spain. Last year's conference was a bit of a wakeup call for me, partly because of Mark Eisenstadt's wonderful keynote, and partly because of the realisation of just how quickly Web trends were making much of the presented work obsolete.

This year the community seems to have noticed the change of pace, and although no wonderful answers were presented, at least we heard some of the right questions being asked. The location was pretty fine as well - Santander is a well kept Spanish secret - although, as you can tell from this picture of us outside our hotel, we found the weather tough going (and yes, we really did send that many people :-)

(In fact, there are a few people missing from that photo. There were 14 of us from LSL by the 2nd day.)

There were some presentations of some neat e-learning tools too, including a tool from the people at the MiGen project for allowing students to construct simple algebraic problems using a graphical editor. It occurs to me that this is actually about teaching abstractions, and might be useful for first year CS and IT students, as well as schoolkids struggling with algebra :-)

My happier assessment of the conference may also have something to do with the fact that one of my PhD students, Asma Ounass, won the best paper award - a brilliant achievement given the size of the conference and the number of papers considered. Asma's paper was on using Semantic Web technology to create student groups, and is available on our School e-prints server.

Probably the most interesting session that I attended was a panel that tried to address the question of 'why technology innovations are still a cottage industry in education' (with Madhumita Bhattacharya, Dragan Gasevic, Jon Dron, Tzu-Chien Liu and Vivekananandan Suresh Kumar).

Some of the panelists took the opportunity to explain why their pet technology or approach was going to save e-learning; however, I found Jon Dron's position statement the most compelling. Jon questioned the assumption behind the topic, and asked if having a cottage industry was so bad, and whether we really wanted to industrialise e-learning. The point behind his question is that Higher Education is itself a bit of a cottage industry: a craft with personalised products and highly skilled craftsmen. The danger is that if you wish for industrialised e-learning systems, you may end up with industrialised learning and teaching.

This set me wondering what cottage industries actually look like in a post-industrial society, and whether or not the tools we need for e-learning are similar to the technologies that they use. Thinking about it, it seems that they apply mechanisation in the small - administrative tools like MS Office and communication tools like email, web sites, social networks and eBay.

That assessment might be just a result of my own prejudices about e-learning technology, but what the analogy does show is that there may be an assumption driving our e-learning systems: VLEs assume that the industrialisation of learning is a good thing (consistent quality and economies of scale), while PLEs assume that the industrialisation of learning is a bad thing (the ownership of production and impersonalisation) and rally against it.

What is not yet clear is whether the cloud approach could scale in the same way as a traditional VLE, enabling institutions to support PLEs on a large scale, or whether the diverse set of people, preferences and tools would create unmanageable complexity. I know that this is a concern with our own systems staff, who have to maintain a large number of systems to ensure quality of service, and I've stated before that I feel that the institutional involvement is essential so there's no avoiding the problem by relying on 3rd party systems.

No answers - but at least we've started asking the questions :-)

Tuesday, July 8, 2008

Free, as in Web Designs

This month I discovered the wonders of free web design. I'm not sure why it never occurred to me before, but it turns out that there is a blossoming community of web designers out there who are in it for the glory, and who make their designs available on sites such as OSWD.org. You can browse the libraries and download a zip package of html templates and css files that you are then free to modify for your own use.

I took the opportunity to revise my own tired homepage, currently celebrating its 12th year of vanity and anonymity, using a design from Node Three Thirty Design (not much on their own page, but there are links to DeviantART and Zeroweb as well).

My website started back in 1996 with a coursework for my degree (on a module which I now run - who says life isn't a circle :-/ )

The text is probably too small to read, but it's an on-line technical report on digital video. The last bit reads:

"New advances both in compression and storage have meant that TV quality pictures and even High Definition TV pictures could soon be possible and in lengths that would enable people to watch entire movies on their monitors. These benefits are so great that even the average media consumer could soon see digital television sets in their homes.

Add to this the ability to transmit video across a network (or the internet) and video conferencing and video phones also seem to be a possibility"

Honestly, any more prophecy and I'd be growing a straggly beard, plucking juniper bushes from thin air, and running around following the gourd.

During my PhD I built a homepage that was a kind of 2D bookmark manager. There was some information about me, but mostly it functioned as a personal hypertext, and a place to put public material (such as teaching notes):

At some point it occurred to me that having some handy search forms on my home page would be a good plan, and so when I became a Research Fellow I added a side bar to search a number of common sites (this was before the days when search had been integrated into browsers):

If I'd been paying attention I might have generalised this and called the result Pageflakes or iGoogle, but I was too busy doing worthy things with Open Hypermedia, and by the time I looked up the boat wasn't even on the horizon any more.

When I became a lecturer I needed a homepage that better reflected the work that I was doing, as well as functioning as a homepage for my own browser. I therefore set about a re-design, and incorporated this blog as aggregated content:

Undeterred by my iGoogle experience I followed Ted Nelson's example and proceeded to invent other potentially profitable tools to ignore, including a news aggregation page that I kept until Google Reader came along and showed me better:

This website was altogether better structured, with proper delimited sections, a decent page layout, and a design encoded using CSS (although I did cheat and use tables). This design has worked really well for over a year now, but in the Spring I noticed something unfortunate about it - it looks like it was drawn by a six year old :-(

The problem is that I just don't have time to do a better job - that would require evenings spent lovingly drawing slightly curved corners and dabbling in CSS voodoo. I was getting despondent - but then came across the Free Web Design People at OSWD, and within a few hours I had a shiny new website. OK, it took a bit longer to glue the various parts together, and I didn't escape some minor CSS witchcraft, but nothing worth burning anyone over. The result is my current design:

I'm not entirely happy with the moody black and white picture (and my wife thinks it makes me look like I've had a stroke :-( ) but I'm proud that, even though I have a Mac now, my homepage photo doesn't involve a polo neck shirt.

So the lesson here is that busy geeks of the world, you need toil no more! Friendly graphic designers have rescued you from your tardy prisons and 90's vi coded html.

You are free (as in Web Design)!

Labels: homepage, web design, websites

Thursday, June 26, 2008

ACM Hypertext 2008 and Web Science Workshop

Update 27-10-08: A more formal version of this blog entry appeared in the SIGWEB Newsletter as the Hypertext 2008 trip report. You can find the full text as a pre-print in the ECS e-prints repository.

I've just got back from this year's ACM Hypertext Conference in Pittsburgh, PA. Hypertext '98 was also in Pittsburgh; that was my first academic conference, and also my first trip to the States, so it was interesting to head back there. Pittsburgh seemed smaller this time around, and the culture shock was missing - either Pittsburgh is more European than it was (certainly there are a lot more European style cars crawling about) or I've just gotten used to America (in the ten years since I've been to a lot of other states, and some of them like Texas and Florida are as different from the East Coast as the East Coast is to the UK).

Hypertext is always a fun event and this year the conference was looking healthier than it has for a long time. Good local organisation and an impressive conference dinner on Mount Washington overlooking the city certainly helped, but the main reason was probably that the CFP was broadened to include Social Linking. This has brought in a whole new side of the community, resulting in a great mix of papers. As I pointed out in a short paper of my own a few years ago, Web 2.0 style interaction was at the heart of Hypertext systems before there was even a Web 1.0, and so it's strange that the conference doesn't already attract more people from the world of Blogs, Wikis, Tagging, Social Networking and so forth. Hopefully this event marks a turning point and the trend will continue in the future.

Defining Disciplines

My main role at this year's conference was as the Workshops Chair, but I also stood in for Weigang Wang as Chair for the Web Science Workshop, which was very well attended (20 people in total). Web Science is a new discipline proposed by Tim Berners-Lee, Wendy Hall and others, and is concerned with the study of how the Web interacts with People and Society. I think it's useful to think in terms of Web Science, but was aware that there are a lot of people already working on topics in this area, and saw the workshop as a chance for them to get involved, and start to take some ownership of the idea.

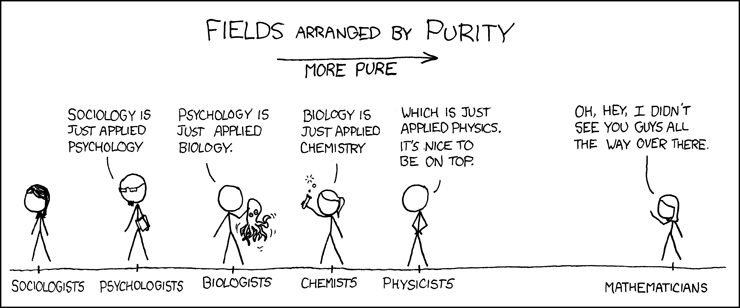

A variety of work was presented and we spent some time discussing the difficulty of defining a new discipline, with the observation that it is often as useful to think about what it isn't, as well as what it is.

My PhD student Charlie pointed me to the cartoon above that neatly summarises some of the difficulties. Defining disciplines certainly isn't easy; we even got sidetracked into a discussion about whether Computer Science was a subset of Information Science or if it was the other way around! For me the real challenge for Web Science will be when people start to design undergraduate courses, because at that point it needs to stand separately from other disciplines (and Computer Science in particular). At least in the meantime we know it's a subset of Mathematics :-)

Does Hypertext Work?

Although he was beaten to the best paper award, I was most taken by David Kolb's excellent paper and presentation 'The Revenge of the Page', which examined the viability of complex hypertexts 'in the pitiless gaze of Google Analytics'. David had created a new complex hypertext work, available on the Web, with a mix of sophisticated hypertext patterns (of links) designed to affect the reader's experience (using techniques such as juxtaposition and revisiting). However, Google's stats told him that few visitors lasted more than a few seconds, the majority were coming via Google Images looking for photos, and even when these were factored out visitors only stayed for a minute or so. It certainly sparked some interesting conversations about the viability of nuanced hypertexts, the unexpected arrivals that result from search tool indexing, and whether hypertext is fighting a 'quick-fix' media culture that is prevalent on the Web, and may even be spreading into normal media.

It made me reflect on the sad state of Web-based hypertexts. I wonder if the problem is two-pronged, that most readers don't have any accessible examples of readable hypertexts, and that there are no popular tools to create hypertexts (saving perhaps Tinderbox).

The hypertext authoring tools that are used by millions of people are mostly Wikis and Blog editors, and these encourage only exit links created around a single article page (exit links are links that take the reader elsewhere, perhaps to supplementary material, rather than to another part of the same work).

For example, this is a pretty long blog entry, so why is it written as a single article, why not as a hypertext?

I suspect that along with the tools it is familiarity that breeds this kind of linear article. After all I spent my childhood writing linear stories, and my adulthood writing linear papers. There is a growing body of classic hypertext fiction, but most of it is challenging, and has never been seen by most readers. Maybe we need more easily accessible hypertext works (along the lines of 253) so that readers get used to seeing hypertexts, and understand what to do with them. In the end that is the only way that they are ever going to actually write them.

Trends

Despite David's gloomy experience the overall feeling of the conference was positive, however there was no Grand Vision underpinning the presentations, and much more analysis of what's already happening, rather than any looking forward to the next big thing. Perhaps the community has already been stung (Open Hypermedia for example), or maybe there is just so much activity in the Web 2.0 space already, that it's as much as we can do to monitor and evaluate things - without adding to the madness ourselves.

One topic that was very noticeable in its absence was the Semantic Web. In previous conferences much has been made of it, both in terms of a long term Web 3.0 candidate, but also in a number of practical applications. So why the low profile? Could it be that the Semantic Web has quietly arrived already, or is it that the world has moved onwards, and the Semantic Web is no longer a convincing vision?

My feeling is that the Semantic Web has already arrived, but with a whimper rather than a bang. Its concepts underpin a lot of the work that is happening in the Web 2.0 world (Semantic Wikis and Folksonomy research for example), and the standards are being used in anger for many knowledge-base systems and mash-ups, but it's not common enough for its use to be widely analysed. Perhaps it never will be.

I've been studying this stuff for long enough now to realise that sometimes a technology succeeds, and sometimes it merely inspires. What we have now isn't very Semantic, and it's not really a Web either - but it is certainly in the spirit of the original vision (there's that word again).

So perhaps the relationship between the Semantic Web and Web 3.0 will be similar to the relationship between Hypertext Systems and Web 2.0. That would be interesting, as the genealogy (memealogy?) from Hypertext to Web 2.0 is rather tortuous, and full of painful extinction and reinvention. I suspect that the Semantic Web is rather better placed to be transformed from research darling into popular technology (due to a well defined stack of standards), and that Web Science may actually help the process.

The Hypertext conference is a good place to find out :-)

Labels:

academic,

hypertext,

semantic web,

web science

Thursday, June 12, 2008

Bon Jovi at Southampton

Last night I went to see Bon Jovi play at St. Mary's Stadium in Southampton - it was the first UK date of their Lost Highway Tour.

My wife Jo and I don't really share the same tastes in music, she likes unbearable cat stranglers like Westlife and Enrique Iglesias, and I like bearable but LOUD cat stranglers like Muse, Metallica and Nightwish. Bon Jovi manage to sit in the very small overlap in our tastes, jostling for space on a tiny soft rock ledge with bands like the Kaiser Chiefs and the Hoosiers.

I bought us seated tickets for the event, but this turned out to be a mistake as the organisers seemed to get a bit confused, and when we arrived it turned out that my tickets were for seats that were actually behind the stage. Exactly what sort of spectacle they were hoping to provide when all we could see was the back of a speaker stack was unclear. Luckily the marshals didn't seem too bothered by us moving around, so when the band started playing we moved to stand in a walkway where we could see as well as hear them.

(more pics on the St. Mary's site)

A local band kicked things off (Hours Til Autumn), and they actually did a pretty good job, especially since this was their first gig with more than 100 people (29,000 in the stadium). The big disappointment was with the major support act - The Feeling are supporting Bon Jovi for half of their UK gigs, and we were hoping for a surprise support act in Southampton (Nickelback did the honours for them back in 2006, and Razorlight are doing their Ireland dates, so we had high hopes). Sadly the surprise was that there was no support act at all, and Bon Jovi came on cold at 8pm.

It was a good set, with just the right mix of classic stuff from their big 80's and 90's albums, and the better new songs from the Lost Highway album, but without a big support act out front they started pretty slowly, and it took a good hour for things to really get going.

The last hour was damn fine though and they managed to lift the stadium despite the drizzle. In fact I've caught myself humming a number of embarrassing soft rock classics this morning, so something must have clicked :-)

So a good night, even if the stadium should be ashamed of selling unusable seats (especially when the event wasn't sold out!), and even if Bon Jovi had to be their own warm up artists. If you're thinking of getting tickets for any of their other gigs - go for the ones where The Feeling are playing. Not only will you get an extra hour of crooning, but you're more likely to have got into the swing of things by the time Bon Jovi start their set.
